Belgium: New report calls for a ban on 'predictive' policing technologies

15 April 2025

Following an investigation carried out over the past two years, Statewatch, the Ligue des droits humains and the Liga voor mensenrechten have jointly published a report on the development of ‘predictive’ policing in Belgium. These systems carry inherent risks, particularly when they rely on biased databases or sociodemographic statistics. The report calls for a ban on ‘predictive’ systems in law enforcement.

Support our work: become a Friend of Statewatch from as little as £1/€1 per month.

Police forces around the world rely on algorithms to analyse their databases, predict future crimes and profile potential culprits. Belgium is no exception.

In Antwerp, in the Brussels suburbs and along the Belgian coast, police forces have launched so-called ‘predictive’ policing initiatives.

The Federal Police, for its part, has initiated an ambitious digitisation project, i-Police, integrating American and Israeli algorithmic analysis software.

At both the local and federal levels, the lack of transparency regarding these practices is glaring, and the limited information available is particularly concerning.

The report shows that the Belgian police have significant shortcomings in how they manage and control their databases. The information those databases contain is often biased or unfounded.

Moreover, predictive policing systems are known to reproduce and exacerbate structural inequalities and discrimination against the most marginalised groups in society.

The report calls for a full ban on ‘predictive’ policing in Belgium.

Executive summary (pdfs)

Full report (pdfs)

Further reading

UK: Over 1,300 people profiled daily by Ministry of Justice AI system to ‘predict’ re-offending risk

Over 20 years ago, a system to assess prisoners’ risk of reoffending was rolled out in the criminal legal system across England and Wales. It now uses artificial intelligence techniques to profile thousands of offenders and alleged offenders every week. Despite serious concerns over racism and data inaccuracies, the system continues to influence decision-making on imprisonment and parole – and a new system is in the works.

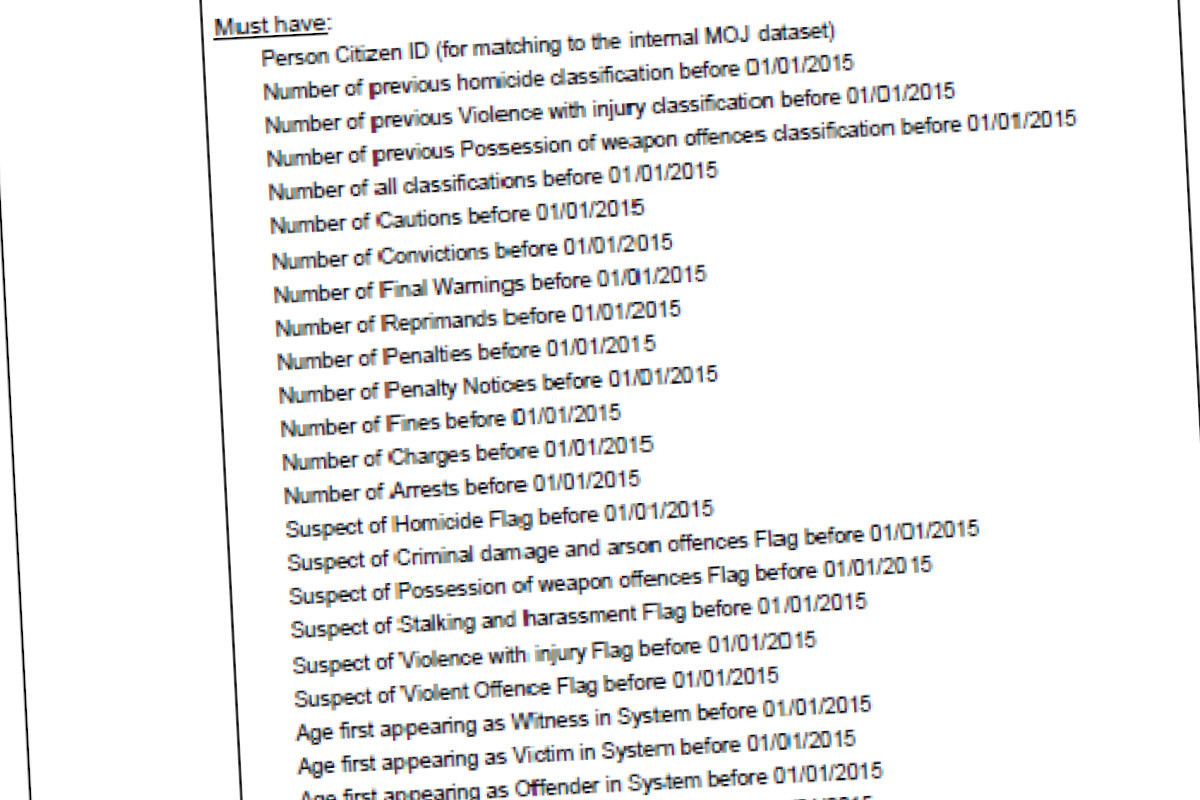

UK: Ministry of Justice secretly developing ‘murder prediction’ system

The Ministry of Justice is developing a system that aims to ‘predict’ who will commit murder, as part of a “data science” project using sensitive personal data on hundreds of thousands of people.

UK government wants to legalise automated police decision-making

A proposed law in the UK would allow police decisions to be made solely by computers, with no human input. The Data Use and Access Bill would remove a safeguard in data protection law that prohibits solely automated decision-making by law enforcement agencies. Over 30 civil liberties, human rights, and racial justice organisations and experts, including Statewatch, have written to the government to demand changes.
